Is AI Good or Bad? Why It's Time to Ask Better Questions

Why Simplifying AI Into 'Good' or 'Bad' Misses the Bigger Picture—and What We Should Really Be Asking

👋 Hey, it’s CJ. Welcome to my weekly newsletter, where I share insights on trends, managing up, accelerating your career, and standing out in any room.

Read time: 4 minutes

When we talk about artificial intelligence (AI), it’s tempting to paint it in broad strokes: AI is either “good” or “bad.”

But let’s be real—that binary thinking doesn’t serve us.

AI, like most technology, is complex and nuanced, and labeling it as just “good” or “bad” oversimplifies its impact on the world.

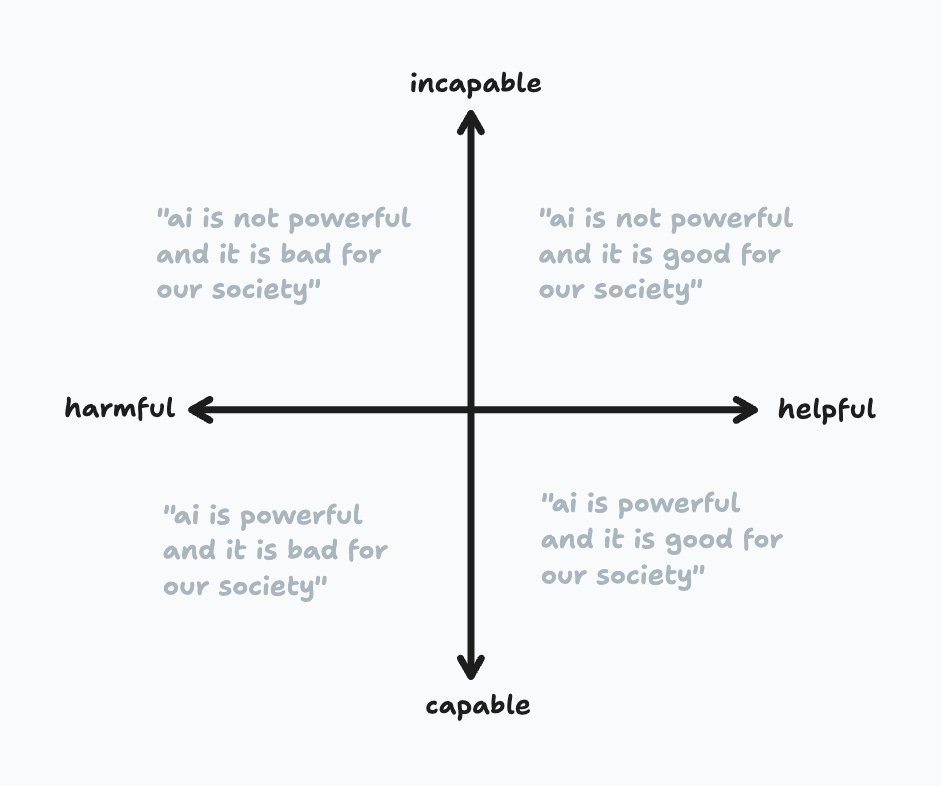

The truth is, AI isn’t inherently either. It’s a tool. And like any tool, its value is defined by how it’s used and the outcomes it generates. The real conversation we need to be having is around the implications of its usage: Is it helpful or harmful? Capable or incapable? This shift in framing is critical, especially as AI continues to evolve at breakneck speed.

Helpful vs. Harmful

Instead of asking if AI is “good” or “bad,” let’s focus on whether it’s helpful or harmful. When AI is helpful, it’s solving real problems: automating mundane tasks, assisting in medical diagnoses, enhancing productivity. These are the stories we love to hear, where AI is contributing positively to society.

I use AI to help me clarify my thoughts and find better ways to convey my ideas. All of this is super helpful.

But AI has a harmful side, too. When bias creeps into AI algorithms, entire communities can be marginalized. Think about facial recognition systems that misidentify people with darker skin at disproportionately high rates, or hiring algorithms that perpetuate gender biases. It’s not that the AI itself is malicious, but the outcomes it generates can cause harm if left unchecked. If we’re going to champion AI as a solution, we need to remain vigilant about the harm it can also cause.

Capable vs. Incapable

Another dimension to evaluate is whether AI is capable or incapable. There’s no question that AI is getting more advanced—it can play chess better than any human, analyze vast datasets in seconds, and even generate art. But that doesn’t mean it’s always capable of understanding the nuance behind the tasks it performs.

Consider chatbots. They’re great at handling routine customer service inquiries, but the moment you ask them to deal with a complex emotional issue, they falter. Their capabilities are vast but limited. As AI expands into more industries, understanding its limits is just as important as acknowledging its strengths.

Clarity is Key

This shift in language (helpful/harmful, capable/incapable) matters because it brings clarity to the conversation. When we say “AI is bad,” are we really talking about its potential for harm, or are we expressing frustration over its limitations? If AI is “good,” is that because it’s advancing technology, or because we haven’t yet seen the negative consequences of its deployment?

The clearer we are about what we mean, the better equipped we’ll be to deal with the real challenges AI presents.

The reality is, all of us are about to be completely inundated with AI features in literally everything we use. I was in a meeting recently, and someone mentioned that their new washing machine uses AI. We laughed about it.

The Takeaway

AI isn’t a one-size-fits-all solution, and it shouldn’t be seen as such. It can be both helpful and harmful, capable and incapable—sometimes simultaneously.

The key is to acknowledge that duality and move beyond simplistic labels. Whether I’m working with companies or churches, meaningful discussions about this evolving technology require a more nuanced perspective. Without this, we can’t fully grasp its implications, dangers or opportunities.

Let’s stop asking if AI is “good” or “bad” and start asking better questions:

How can we make AI more helpful, less harmful, and more capable of serving us all?